Automation: techies love it because it frees up their increasingly limited time, and leaders love it because it delivers better ROI on both technology and human resources. So why aren’t we automating everything? Because security automation is still difficult, largely due to the data we have to work with and the many schemas it comes in. Simply put, a data schema is a way of organizing, relating, and containing data. Think of schemas as the languages our technologies speak: just as humans speak many languages, so do our tools. Why is that, and how does Swimlane Turbine address this data challenge?

Let’s take a quick look back at the history of security operations (SecOps).

Logs: We’ve Seen This Movie Before

As security operations center (SOC) practitioners, we have always counted visibility among our top concerns, and visibility meant getting logs from everything. We had a standard, proven, and well-documented protocol for moving logs around (syslog) that got those logs where we wanted them. What we did NOT have was a standard format for the logs themselves. Every developer of every application, security-related or not, could format their logs however they liked; the only requirement was to TRANSMIT them via syslog, which they nearly always did.

So getting the logs from the source to the aggregator, whether that was a syslog collector or, later on, a SIEM system, was simple. But once the logs got there, we depended either on built-in parsing logic to put the relevant data into meaningful fields, or on parsers we wrote ourselves. Without them, we couldn’t make sense of what we were seeing, and the data was cumbersome and nearly useless.
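To make the parsing problem concrete, here is a minimal Python sketch of what writing those parsers ourselves looked like. The two vendor formats, the app names `vendor-a-fw` and `vendor-b-fw`, and the simplified BSD-style (RFC 3164) syslog header are all hypothetical. The point is that the transport header arrives in a predictable shape, while each message body needs its own parser before its fields mean anything.

```python
import re
from typing import Callable, Optional

# Hypothetical common fields we want every firewall log to end up with.
NormalizedEvent = dict[str, str]

# Hypothetical vendor A writes key=value pairs, e.g. "src=10.0.0.5 dst=8.8.8.8 action=allow".
KV_PAIR = re.compile(r"(\w+)=(\S+)")

def parse_vendor_a(message: str) -> Optional[NormalizedEvent]:
    fields = dict(KV_PAIR.findall(message))
    if not {"src", "dst", "action"}.issubset(fields):
        return None
    return {"src_ip": fields["src"], "dst_ip": fields["dst"], "action": fields["action"].lower()}

# Hypothetical vendor B writes positional text, e.g. "DENY 10.0.0.7 -> 203.0.113.9".
ARROW = re.compile(r"^(ALLOW|DENY)\s+(\S+)\s+->\s+(\S+)$")

def parse_vendor_b(message: str) -> Optional[NormalizedEvent]:
    match = ARROW.match(message.strip())
    if match is None:
        return None
    action, src, dst = match.groups()
    return {"src_ip": src, "dst_ip": dst, "action": action.lower()}

# Simplified syslog header: "<PRI>Mmm dd hh:mm:ss host app: message".
SYSLOG_HEADER = re.compile(r"^<(\d+)>(\w{3}\s+\d+\s[\d:]{8})\s(\S+)\s([\w-]+):\s(.*)$")

# One parser per source application; anything not listed here stays opaque.
PARSERS: dict[str, Callable[[str], Optional[NormalizedEvent]]] = {
    "vendor-a-fw": parse_vendor_a,
    "vendor-b-fw": parse_vendor_b,
}

def normalize(line: str) -> Optional[NormalizedEvent]:
    header = SYSLOG_HEADER.match(line)
    if header is None:
        return None
    _pri, timestamp, host, app, message = header.groups()
    parser = PARSERS.get(app)
    if parser is None:
        return None  # syslog delivered it fine, but without a parser we can't read it
    event = parser(message)
    if event is not None:
        event.update({"timestamp": timestamp, "host": host, "source": app})
    return event
```

Both vendors' lines come out with the same `src_ip`, `dst_ip`, and `action` keys, while a line from an application with no registered parser returns `None`, which is exactly the "cumbersome and nearly useless" case described above.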

SOAR: The More Things Change…

We still see this challenge today with SOAR platforms, because they do the same things with the same kinds of data, only for broader purposes. In the past, we just wanted to pull and organize “structured data,” i.e., logs. Today the problem is harder: we aren’t just pulling structured data and reading it; we’re also handling growing volumes of what I would call semi-structured data from a myriad of REST APIs, plus data ingested via webhooks. Read on to see how AI-enhanced security automation platforms differ and how they help address this classic SOAR and SOC challenge.

Dealing With Signals…

Automation requires some sort of “signal” to trigger additional actions. In SecOps, signals are usually alerts, such as those from endpoint detection systems, SIEM systems, next-gen firewalls, or cloud security systems. However, signals can take other forms, such as vulnerability findings, attack surface events, OT response alerts, or even process-oriented events like new user requests for identity provisioning and new build requests that require application scanning.

The trouble with these signals, just like our old logs, is that they’re written by disparate developers with different requirements and no common standard or framework. As a result, SecOps teams looking to automate face the same question they faced with logs: what is important in this blob of data I just received?
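One way to start answering that question is to walk the blob and surface anything that looks like an observable, wherever the vendor happened to nest it. The Python sketch below does this for IP addresses only, using the standard `ipaddress` module; the alert structure in the test is invented for illustration and does not correspond to any real product.

```python
import ipaddress
from typing import Any, Iterator

def walk(blob: Any, path: str = "") -> Iterator[tuple[str, Any]]:
    """Yield (path, value) for every leaf of a nested JSON-like structure."""
    if isinstance(blob, dict):
        for key, value in blob.items():
            yield from walk(value, f"{path}.{key}" if path else str(key))
    elif isinstance(blob, list):
        for index, value in enumerate(blob):
            yield from walk(value, f"{path}[{index}]")
    else:
        yield path, blob

def find_ip_observables(blob: Any) -> dict[str, str]:
    """Return {path: address} for string leaves that parse as IPv4 or IPv6 addresses."""
    found = {}
    for path, value in walk(blob):
        # Only strings are considered: ipaddress would also accept a bare integer
        # such as a severity score, which is not what we want here.
        if not isinstance(value, str):
            continue
        try:
            ipaddress.ip_address(value)
        except ValueError:
            continue
        found[path] = value
    return found
```

Because the result is keyed by path, the same function works whether one vendor puts the remote address at the top level and another buries it three levels deep inside a list.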

…And Layering On Actions

What’s more, we’re often pushing data, and even actions, back to a different set of REST APIs or webhooks. Just as syslog became the common transport for logs, these systems have largely standardized on JSON over HTTP as the primary transfer mechanism, but each one keeps its own endpoints, fields, and data format expectations. That means we not only have to parse inbound data from source tools, but also properly format outbound data, so we can take effective and efficient actions with the other tools that are critical parts of our processes and workflows. If the receiving tool can’t understand what we’re sending, it will simply error out, or worse, accept it and act on bad data. “Garbage in, garbage out,” indeed.
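The outbound half of the problem can be sketched the same way: map internal values onto the receiver’s vocabulary, then validate the payload locally before it goes out. Everything in this Python sketch is hypothetical, including the ticketing endpoint, its `title`/`priority`/`observables` contract, and the severity thresholds; a real integration would follow that tool’s own API documentation. The request is only constructed here, not sent.

```python
import json
import urllib.request
from typing import Any

# Hypothetical contract for a downstream ticketing API; every real tool has its own.
REQUIRED_FIELDS = {"title": str, "priority": str, "observables": list}
ALLOWED_PRIORITIES = {"P1", "P2", "P3", "P4"}

def severity_to_priority(severity: int) -> str:
    """Map an internal 0-10 severity onto the receiver's P1-P4 scale (assumed thresholds)."""
    if severity >= 9:
        return "P1"
    if severity >= 7:
        return "P2"
    if severity >= 4:
        return "P3"
    return "P4"

def validate(payload: dict[str, Any]) -> None:
    """Fail fast locally instead of letting the receiver reject (or misread) the request."""
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(payload.get(field), expected_type):
            raise ValueError(f"field {field!r} missing or not {expected_type.__name__}")
    if payload["priority"] not in ALLOWED_PRIORITIES:
        raise ValueError(f"priority {payload['priority']!r} not accepted by receiver")

def build_payload(event: dict[str, Any]) -> dict[str, Any]:
    """Translate an internal event into the receiver's field names and values."""
    payload = {
        "title": f"{event['name']} on {event['hostname']}",
        "priority": severity_to_priority(event["severity"]),
        "observables": sorted(set(event.get("ips", []))),  # de-duplicated, stable order
    }
    validate(payload)
    return payload

def build_request(payload: dict[str, Any]) -> urllib.request.Request:
    # JSON over HTTP: the transport is shared, but the field names and values are not.
    return urllib.request.Request(
        "https://ticketing.example.com/api/v1/incidents",  # hypothetical endpoint
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Validating before sending keeps a malformed payload from becoming the receiver’s problem; the error surfaces in the playbook, where it can be handled.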

How Can Turbine Help?

So, what can you find in Swimlane Turbine that helps builders and orchestrators more quickly and easily overcome these constant data format challenges?

Vendor-Specific Components

Components represent a major upgrade within Turbine Canvas, the low-code playbook building studio. They have an increasingly important role to play, especially when we’re talking about ingesting data. Swimlane has always focused on creating the necessary connectors and integrations for any tools our customers use. We’re taking that commitment to the next level with vendor-specific components.

Think of these vendor components as connectors-on-steroids. Sure, they provide the connector and built-in actions to interact with those systems, but they also include a set of playbooks that apply back-end business logic to automatically extract, transform, format, and map the data in ways that make it quickly and easily available anywhere in Turbine. Thus, for the majority of common security applications and platforms, data ingestion and integration can be accomplished in minutes rather than hours or days.
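We can’t show the business logic inside Turbine’s vendor components, but the general extract, transform, and map pattern described above can be sketched as a declarative mapping table. In this Python sketch, the vendor keys `edr_vendor_x` and `siem_vendor_y`, their source paths, and the per-field transforms are all assumptions; the idea is that two differently shaped inputs end up as records with the same fields.

```python
from datetime import datetime, timezone
from typing import Any, Callable, Optional

def get_path(blob: Any, path: str) -> Any:
    """Follow a dotted path like 'device.hostname' through nested dicts; None if absent."""
    current = blob
    for part in path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current

# For each normalized field: (source path, optional transform). All names are hypothetical.
FieldMap = dict[str, tuple[str, Optional[Callable[[Any], Any]]]]

VENDOR_MAPPINGS: dict[str, FieldMap] = {
    "edr_vendor_x": {
        "hostname": ("device.hostname", str.lower),
        "severity": ("detection.severity", int),
        # Assumed to arrive as Unix epoch seconds; converted to ISO 8601 in UTC.
        "observed_at": ("detection.epoch", lambda s: datetime.fromtimestamp(s, tz=timezone.utc).isoformat()),
    },
    "siem_vendor_y": {
        "hostname": ("host", str.lower),
        "severity": ("risk_score", lambda r: round(r / 10)),  # assumed 0-100 score scaled to 0-10
        "observed_at": ("event_time", None),  # assumed to be ISO 8601 already
    },
}

def apply_mapping(vendor: str, blob: dict[str, Any]) -> dict[str, Any]:
    """Extract, transform, and map one vendor payload into the normalized record."""
    record = {}
    for target, (source_path, transform) in VENDOR_MAPPINGS[vendor].items():
        value = get_path(blob, source_path)
        if value is not None and transform is not None:
            value = transform(value)
        record[target] = value  # missing source fields stay None rather than failing
    return record
```

Keeping the mapping as data rather than code means adding a new vendor is a matter of writing another table entry, which is the same reason prebuilt mappings can cut integration time so sharply.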

Simple Native Actions for Data Manipulation

Swimlane Turbine’s native actions have always provided game-changing abilities for dealing with data, and our data manipulation native actions stay true to that. Turbine’s primary transformation capabilities are built on JSONata, a query and transformation language for JSON. JSONata can sometimes be tricky to navigate, so we have GUI-fied it: even those who aren’t familiar with the ins and outs of extended JSONata transforms can build them quickly and easily.

Hero AI Schema Inference

Swimlane Hero AI is a collection of AI-enhanced innovations available within the Turbine security automation platform. Schema inference is one of the first features where Swimlane applied artificial intelligence to make AI-enhanced security automation a true force multiplier directly within the application. Let’s say you have a new data input from an action, but no component exists for it, so it arrives as a raw blob of JSON.

Hero AI schema inference knows which fields and field types you use in Turbine, reads that incoming JSON, and automatically suggests mappings from the relevant input fields to the schema fields you already depend on. Even better, it does this without you having to ask for help.
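Hero AI’s inference model isn’t described here, so the following is not how it works. It is a deliberately simple, non-AI stand-in that illustrates what “suggesting mappings” means: flatten the incoming JSON, infer a coarse type for each leaf, and use string similarity (`difflib.get_close_matches` from the Python standard library) to propose an existing schema field of the same type. The schema field names, the coarse type labels, and the 0.6 similarity cutoff are all assumptions.

```python
import difflib
from typing import Any

def infer_type(value: Any) -> str:
    """Assign a coarse type label to a JSON leaf value."""
    # bool must be checked before int, because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, list):
        return "list"
    if isinstance(value, dict):
        return "object"
    return "text"

def flatten(blob: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Turn nested dicts into {dotted.path: leaf_value}; lists are kept as leaves."""
    flat = {}
    for key, value in blob.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat

def suggest_mappings(incoming: dict[str, Any], schema: dict[str, str], cutoff: float = 0.6) -> dict[str, str]:
    """Suggest incoming_path -> schema_field where names are similar and types agree."""
    suggestions = {}
    for path, value in flatten(incoming).items():
        leaf = path.rsplit(".", 1)[-1].lower()
        # Only consider schema fields whose declared type matches the inferred type.
        candidates = [field for field, kind in schema.items() if kind == infer_type(value)]
        match = difflib.get_close_matches(leaf, candidates, n=1, cutoff=cutoff)
        if match:
            suggestions[path] = match[0]
    return suggestions
```

Fields with no sufficiently similar, type-compatible counterpart (a top-level `vendor` string, say) simply get no suggestion, leaving that decision to the builder.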

Hero AI Text-to-Code Chatbots

Chatbots are a proven way to bring the power of AI into everyday work quickly and easily, and they are particularly helpful for simplifying complex user needs. Schema mapping is one thing, but as builders we often still need to take more advanced actions with the data we’re handling in our processes. To make this easier, Turbine includes Hero AI text-to-code chatbots that let users describe a specific data transformation or formatting need in plain language and get it back as Python code.

For example, the user can simply ask the Hero AI chatbot: “Write a transform in Python that extracts the fields ‘src_addr’, ‘dst_addr’, ‘application’, and ‘ts’ from an input, format the contents of the ‘ts’ field into an ISO 8601 format, and verify that the ‘src_addr’ and ‘dst_addr’ fields are not RFC 1918 addresses.” The chatbot would then respond with the relevant Python code for the user to apply as needed.
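For illustration, here is one plausible Python answer to that prompt; it is a hand-written sketch, not actual chatbot output. Two assumptions are worth flagging: `ts` is taken to be Unix epoch seconds, and “verify” is interpreted as raising a `ValueError` when either address falls in an RFC 1918 range (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).

```python
import ipaddress
from datetime import datetime, timezone
from typing import Any

# RFC 1918 private IPv4 ranges. ipaddress's is_private is broader (it also covers
# loopback, link-local, and other special-purpose ranges), so these are checked explicitly.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]

def is_rfc1918(address: str) -> bool:
    """True if the address is IPv4 and inside one of the RFC 1918 ranges."""
    ip = ipaddress.ip_address(address)
    return ip.version == 4 and any(ip in net for net in RFC1918_NETWORKS)

def transform(record: dict[str, Any]) -> dict[str, Any]:
    # Extract only the requested fields; a missing field raises KeyError.
    out = {key: record[key] for key in ("src_addr", "dst_addr", "application", "ts")}
    # Assumption: 'ts' is Unix epoch seconds; render it as ISO 8601 in UTC.
    out["ts"] = datetime.fromtimestamp(float(out["ts"]), tz=timezone.utc).isoformat()
    # Verification: reject records whose addresses are RFC 1918 (private) addresses.
    for key in ("src_addr", "dst_addr"):
        if is_rfc1918(out[key]):
            raise ValueError(f"{key} {out[key]} is an RFC 1918 address")
    return out
```

Raising is only one reading of “verify”; a playbook that should keep processing could instead attach a boolean flag per address and branch on it.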

Never Settle for the SecOps Status Quo

Your security automation platform needs to be as flexible as your use cases demand, yet as simple as possible, so that more of your staff can take advantage of it. That also means handling flexible, diverse datasets in a way that is equally simple and manageable. Together, the features and functionality outlined above allow Turbine to act as the Rosetta Stone of SecOps, enabling our customers to strike the right balance of flexibility and simplicity. Our commitment to Turbine’s low-code paradigm ensures that our platform is never merely “good enough.”

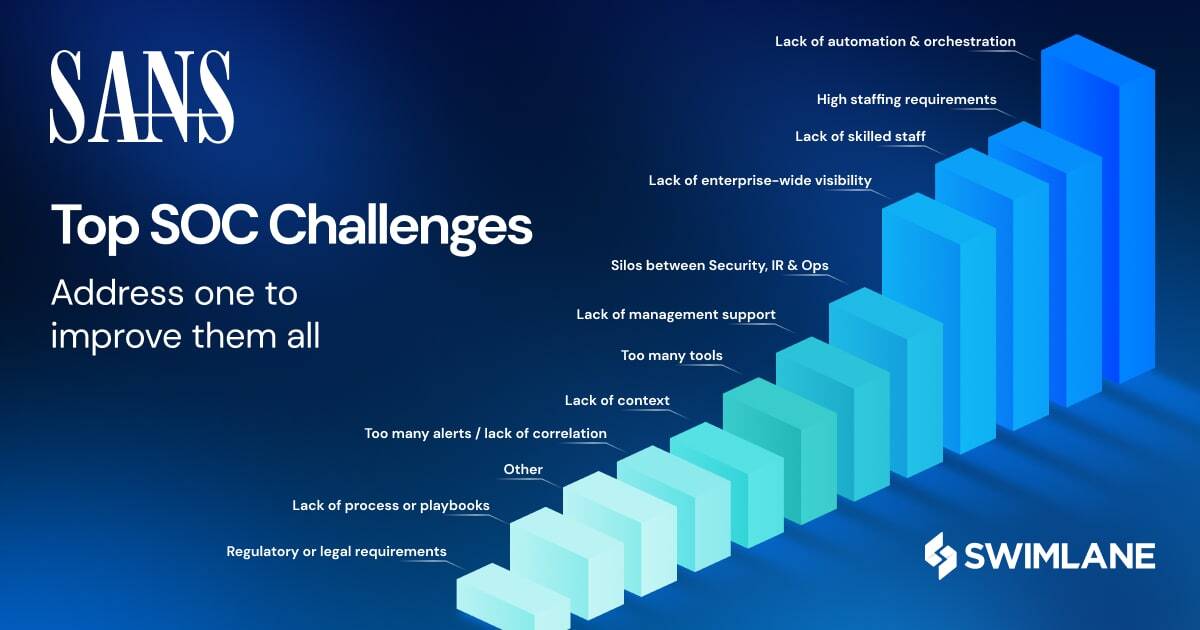

SANS Security Operations Center Survey

Security Operations Centers (SOC) continue to evolve and mature their capabilities, architectures, and management strategies as the threat landscape evolves. The 2024 SANS SOC Survey, written by Chris Crowley, analyzes data from global SOC teams to report on common challenges and how leaders intend to recalibrate near and long-term plans.