Better Angels of AI Agents: Ethical Cybersecurity in Autonomous Systems

The hum of servers, the flicker of screens, the relentless march of technological progress – it can feel as though we are hurtling into a future shaped by forces beyond our control. Yet, as we stand on the precipice of the AI agent revolution, we have a profound opportunity to steer its course. This isn’t just about building smarter machines; it’s about invoking what Abraham Lincoln famously called “the better angels of our nature,” those nobler impulses that guide us toward cooperation, empathy, and collective good. Just as Steven Pinker meticulously detailed how humanity has, over millennia, increasingly embraced its better angels to reduce violence and elevate reason, we must now consciously imbue our autonomous AI agents with the very same principles, thereby reducing costly errors and cybersecurity risks.

The Rise of Autonomous Intelligence: A New Chapter in Cybersecurity Progress

Imagine a world where your AI agent proactively manages your complex cybersecurity projects, not just reminding you of deadlines, but analyzing security data, delegating incident response tasks to other agents, and even drafting initial threat reports – all while you focus on the creative, strategic core of your security operations.

The Promise of AI Agents

This isn’t a distant fantasy; it’s the promise of AI agents. Unlike reactive AI tools that simply respond to your commands, these are autonomous AI entities capable of understanding security goals, planning multi-step solutions for threat detection and response, learning from their environment, and executing actions with minimal human intervention. They are, in essence, digital apprentices, capable of evolving into trusted partners in the Security Operations Center (SOC).

The Future of AI: A Shift from Passive to Proactive Agents

This shift from passive tools to proactive collaborators represents a monumental leap. It’s like moving from a calculator (reactive) to a cybersecurity analyst (proactive), but with vastly greater scope and capability. The future of AI is intrinsically linked to the development of these agents, promising to redefine security operations, accelerate threat intelligence, and personalize defense strategies in ways we’re only beginning to fathom.

The Ethical Imperative: Nurturing Our “Better Angels” in Code for Cybersecurity

However, with immense power comes immense responsibility. Just as Pinker illustrates how societal mechanisms like justice systems and commerce have amplified our better angels, we must design similar “mechanisms” into our AI agents for cybersecurity.

Without a deliberate, human-centered approach, these powerful tools could inadvertently amplify our lesser angels: biases in threat detection, inefficiencies in incident response, and even unintended harm through misconfigurations or false positives. This is where ethical AI principles become not just theoretical, but absolutely critical to the development of AI agents in the cybersecurity domain.

Consider the “sympathy” Pinker describes as one of humanity’s better angels: our capacity for empathy and care. How do we instill a digital equivalent in an AI agent operating in a SOC? It begins with:

Transparency and Accountability: Building Interpretable AI for Security

If an AI agent makes a decision that impacts an organization’s security posture or a significant incident response, we must understand why and how it arrived at that decision. This isn’t just about logging data; it’s about building interpretable AI systems where the logic behind threat assessments and mitigation actions is accessible and auditable by human analysts.
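As a rough illustration of what "accessible and auditable" could look like in practice, the sketch below records each agent decision together with its evidence and rationale, and renders it in a form an analyst can read. The `DecisionRecord` class, its field names, and the sample alert are all hypothetical, chosen only for this example; a real deployment would follow whatever schema its own logging pipeline requires.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable entry: what a (hypothetical) agent decided, and why."""
    alert_id: str
    action: str
    confidence: float
    evidence: list[str]
    rationale: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # sort_keys keeps the serialized form stable, which helps when diffing logs.
        return json.dumps(asdict(self), sort_keys=True)


def explain(record: DecisionRecord) -> str:
    """Render a short, analyst-readable summary of a decision."""
    evidence = "; ".join(record.evidence) or "no evidence recorded"
    return (
        f"[{record.alert_id}] {record.action} "
        f"(confidence {record.confidence:.2f}): {record.rationale} "
        f"Evidence: {evidence}"
    )


# Illustrative values only; not taken from any real incident.
record = DecisionRecord(
    alert_id="ALERT-001",
    action="isolate_host",
    confidence=0.87,
    evidence=["beaconing to unknown domain", "unsigned binary in temp dir"],
    rationale="Pattern resembles command-and-control traffic.",
)
print(explain(record))
print(record.to_json())
```

The point of the design is that the rationale and evidence travel with the action itself, so a reviewer does not have to reconstruct the reasoning from raw logs after the fact.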

Human-in-the-Loop Design: The Essential Role of Human Oversight

Even the most sophisticated AI agents in a SOC need a watchful guardian. Just as Pinker argues that reason allows us to step back from impulsive actions, human oversight provides the necessary “pause button” and moral compass for autonomous security systems. We must design interfaces that allow for easy intervention, course correction, and the ultimate authority of human judgment in critical security decisions.
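One way to picture such a “pause button” is an approval gate that sits between what an agent proposes and what actually runs. The following is a minimal sketch under stated assumptions: the action names in `HIGH_IMPACT_ACTIONS`, the 0.9 confidence threshold, and the `approve` and `execute` callbacks are invented for illustration and do not correspond to any particular product's API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical set of actions considered too disruptive to run unattended.
HIGH_IMPACT_ACTIONS = {"isolate_host", "disable_account", "block_subnet"}


@dataclass
class ProposedAction:
    name: str
    target: str
    confidence: float


def requires_approval(action: ProposedAction, min_confidence: float = 0.9) -> bool:
    """Route high-impact or low-confidence actions to a human analyst."""
    return action.name in HIGH_IMPACT_ACTIONS or action.confidence < min_confidence


def run_with_oversight(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    execute: Callable[[ProposedAction], None],
) -> str:
    """Execute the action only if it is low-risk or a human approves it."""
    if requires_approval(action) and not approve(action):
        return "rejected"
    execute(action)
    return "executed"


# Usage: the analyst callback declines, so nothing is executed.
executed = []
status = run_with_oversight(
    ProposedAction("isolate_host", "srv-01", 0.95),
    approve=lambda a: False,
    execute=executed.append,
)
print(status, executed)
```

In this sketch the human decision is final: a declined action never reaches `execute`, regardless of how confident the agent is.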

Fairness and Bias Mitigation: Crafting Responsible AI in Cybersecurity

Our digital world is riddled with historical biases in data, and if not meticulously addressed, these biases will be ingested and amplified by AI agents learning from existing threat intelligence or security logs. Crafting responsible AI in cybersecurity involves proactively identifying and mitigating these biases, ensuring that agents consider all systems and users with equitable fairness, and do not unfairly target specific groups or applications.
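A simple starting point for spotting this kind of bias is to compare false positive rates across groups of users or systems. The sketch below assumes labeled historical alerts in the form `(group, flagged, malicious)`; the group names and records are made up for illustration, and a gap in false positive rates is only one of several possible fairness measures.

```python
from collections import defaultdict


def false_positive_rates(alerts):
    """Per-group false positive rate: flagged benign events / all benign events.

    Each record is a tuple (group, flagged, malicious).
    Groups with no benign events are omitted from the result.
    """
    benign = defaultdict(int)
    flagged_benign = defaultdict(int)
    for group, flagged, malicious in alerts:
        if not malicious:
            benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {group: flagged_benign[group] / count for group, count in benign.items()}


def disparity(rates):
    """Gap between the highest and lowest per-group rate (0.0 if no groups)."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Illustrative records only: (group, flagged_by_agent, actually_malicious).
sample = [
    ("engineering", True, False),
    ("engineering", False, False),
    ("engineering", False, False),
    ("engineering", False, False),
    ("contractors", True, False),
    ("contractors", True, False),
    ("contractors", True, False),
    ("contractors", False, False),
    ("contractors", True, True),  # true positive, excluded from the rate
]
rates = false_positive_rates(sample)
print(rates, disparity(rates))
```

A large gap does not prove the agent is unfair, but it is a signal that the training data or detection rules deserve a closer look by a human analyst.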

Purpose-Driven Development: Directing AI Capabilities for the Greater Good

We must consciously direct the incredible capabilities of these agents toward the greater good of cybersecurity. This means fostering AI-for-good initiatives within security and designing agents that tackle complex global challenges such as ransomware, advanced persistent threats, and the protection of critical infrastructure. Imagine AI agents optimizing threat hunting in real time or accelerating the discovery of new vulnerabilities.

Practical Steps for a Human-Centered AI Approach in Cybersecurity

Embracing the “better angels” of AI agents isn’t a passive hope; it’s an active endeavor requiring collaboration across disciplines in cybersecurity.

- For Developers and Companies: The responsibility begins at the drawing board. We must embed ethical frameworks into the very architecture of AI agent development for security. This includes rigorous testing for unintended consequences in automated responses, prioritizing robust security to prevent misuse or compromise of the agents themselves, and creating intuitive control interfaces that empower security analysts rather than intimidate them.

- For Regulators and Policymakers: The global nature of cyber threats and AI demands international cooperation. Flexible yet firm AI governance frameworks are essential, encouraging innovation in security solutions while safeguarding against risks posed by autonomous systems. Investing in AI safety research is crucial, both to explore technical solutions and to examine the societal implications of widespread autonomous security systems.

- For Users and the Public: Our role is to be informed and engaged. We must demand transparency and control over the AI agents we interact with, understanding their limitations and advocating for their ethical deployment in protecting our digital lives. Our collective voice can shape the direction of this technology.

A Call to Conscious AI and Human Coexistence in Cybersecurity

The “better angels of our nature,” as Pinker so eloquently argues, are powerful forces that have slowly but surely guided humanity towards a more peaceful and prosperous existence. Now, as we stand at the threshold of the AI agent era in cybersecurity, we have a profound opportunity, and indeed a moral obligation, to imbue these powerful new entities with the same noble impulses.

The future of AI agents in cybersecurity is not pre-ordained. It is a canvas upon which we, as designers, policymakers, and security professionals, will paint. Let us choose to paint a future where human-centered AI is not just a buzzword, but a guiding philosophy. A future where AI agents are not just intelligent, but also wise in their security decisions; not just efficient in threat response, but also ethical in their operations. Let us consciously choose to build agents that reflect our deepest values, amplifying human potential in the SOC and working tirelessly towards a future shaped by our very own “better angels.”

Report

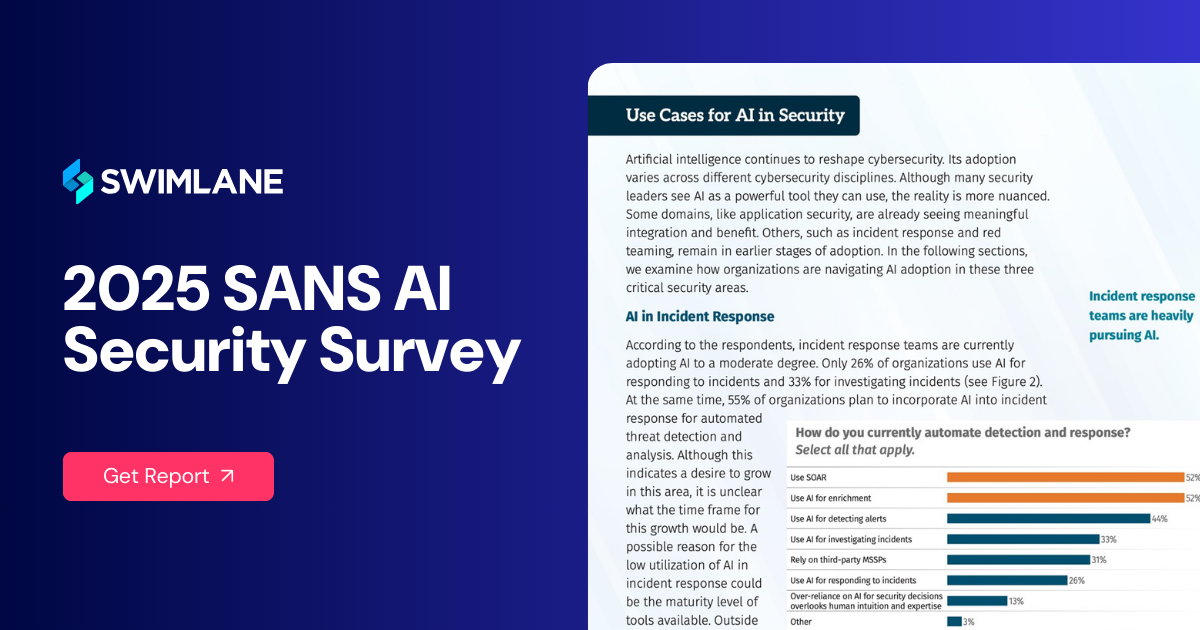

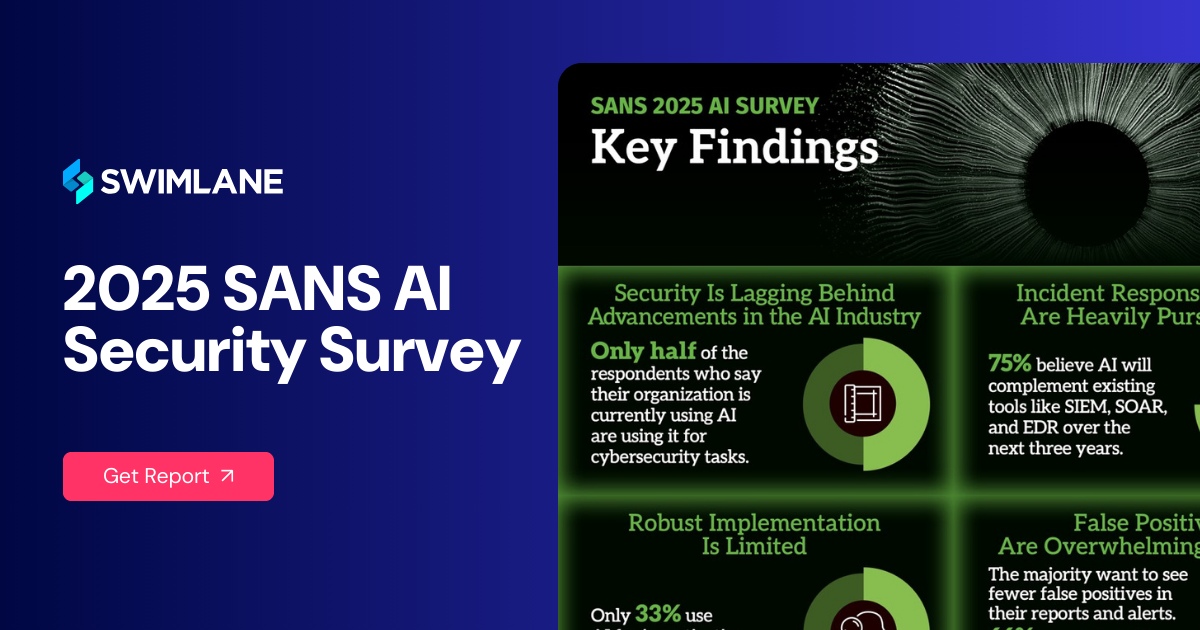

SANS 2025 AI Survey: AI’s Impact on Security Three Years Later

Generative AI (GenAI) and large language models (LLMs) are already here, shaping the cybersecurity landscape.

The 2025 SANS AI Survey, authored by Ahmed Abugharbia and Brandon Evans, examines how organizations are currently utilizing GenAI for security and identifies the problems and threats posed by these new technologies.